
Introduction: The Rise of Digital Warfare Through Social Media Misinformation

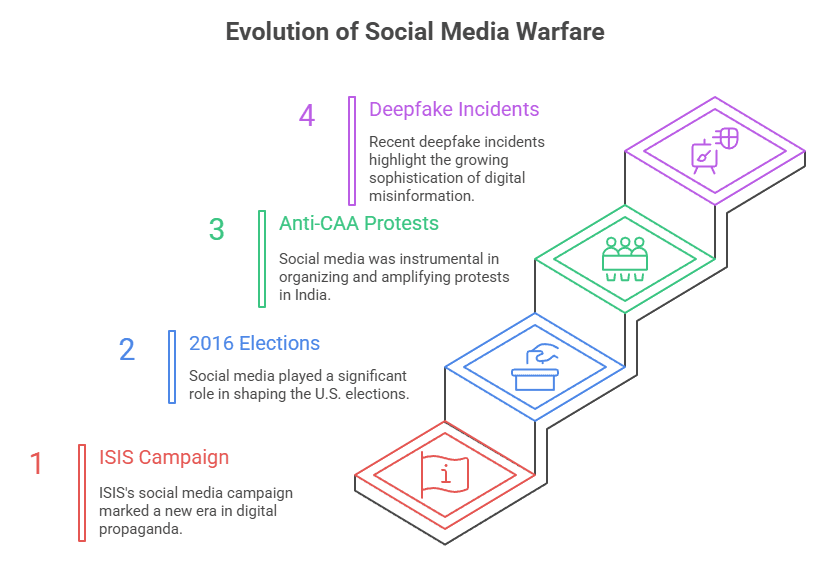

The year 2014 marked a turning point in how misinformation on social media could reshape global conflicts. When ISIS launched the hashtag #AllEyesOnISIS, it did more than announce its invasion of Iraq: it weaponized social media platforms to spread fear and chaos online [1]. This was not traditional warfare alone; it was misinformation spread on an unprecedented scale, and by manipulating public perception through online social networks it contributed to the fall of Mosul.

Just as radio broadcasts changed the dynamics of World War II, ISIS’s success in spreading misinformation through social media altered how the world understood fear and conflict. The spread of misinformation on social platforms has ushered in a new era where digital and physical warfare merge, raising critical questions about how information and misinformation shape modern conflicts.

Carl von Clausewitz famously described war as the continuation of politics by other means [2]. Today, social media platforms have become the primary battleground where the spread of misinformation amplifies real-world violence. From gang disputes to international tensions, online misinformation now plays a crucial role in shaping conflicts. The algorithms that control social media feeds have gained unprecedented power to influence public opinion by deciding which content, true or false, reaches the widest audience.

Understanding how misinformation on social media platforms operates is essential for recognizing the true scope of modern digital warfare. Every person using social media becomes a participant in this conflict, whether they realize it or not.

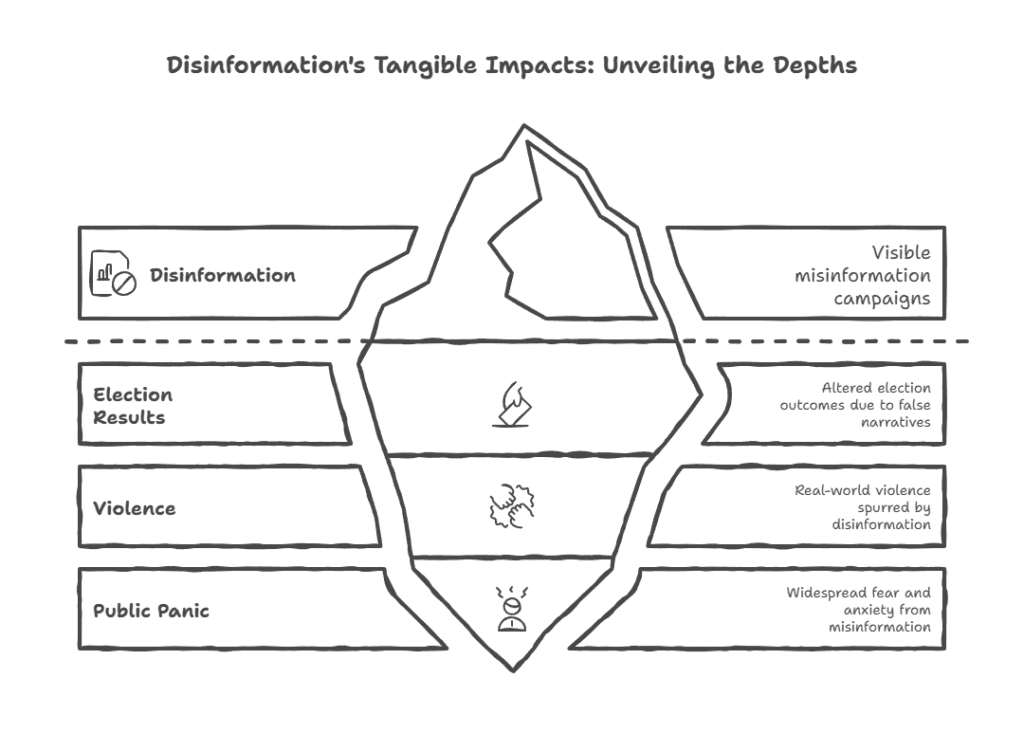

The Devastating Impact of Misinformation: Real-World Consequences

Political Misinformation and Electoral Manipulation

The spread of misinformation during critical events has proven capable of altering entire political landscapes. During the 2016 U.S. presidential election, fake news on social media significantly influenced public discourse and voting patterns [3]. The misinformation problem became so severe that it fundamentally changed how people consume and trust information.

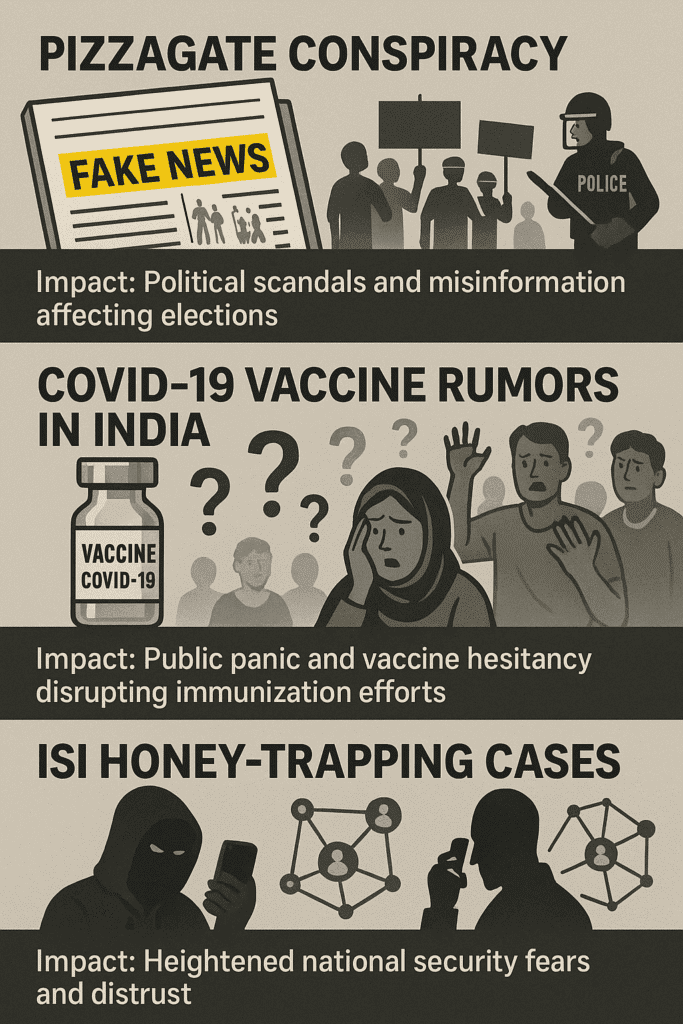

One of the most shocking examples of political misinformation was the #Pizzagate scandal [4]. This conspiracy theory falsely alleged a crime ring involving Hillary Clinton that did not exist. The hoax gained 1.4 million Twitter mentions, demonstrating how misinformation on social media can reach massive audiences. The consequences were terrifyingly real: Edgar Welch arrived at a pizza restaurant with a weapon, believing the false information he had consumed through social media posts.

In India, misleading content circulated during the anti-CAA protests, and false vaccine claims spread during the COVID-19 pandemic. The vaccine misinformation in particular showed how false health claims on social media can undermine the response to a public health emergency and create dangerous situations for communities.

Extremist Recruitment Through Targeted Misinformation

Social media companies face constant challenges from extremist groups who exploit their platforms to recruit vulnerable individuals. These groups use carefully crafted misinformation and disinformation strategies to manipulate perceptions and radicalize users. ISIS recruitment strategies exemplify how online misinformation can be weaponized to influence and recruit followers globally.

International Espionage and Information Warfare

Pakistan’s intelligence agencies, ISI and MI, have developed sophisticated methods for spreading misinformation through social media platforms. They use honey-trapping techniques combined with fake news to gather intelligence and spread divisive content. ISI focuses on creating memes about caste, elections, and Kashmir to damage India’s international reputation, while MI concentrates on extracting information about Indian Army movements through Facebook friendships and WhatsApp communications.

The Arab Spring demonstrates how social media can help mobilize massive political movements [5]. While this shows the positive potential of social platforms, it also highlights how easily the same tools can be used to manipulate public sentiment and organize political action.

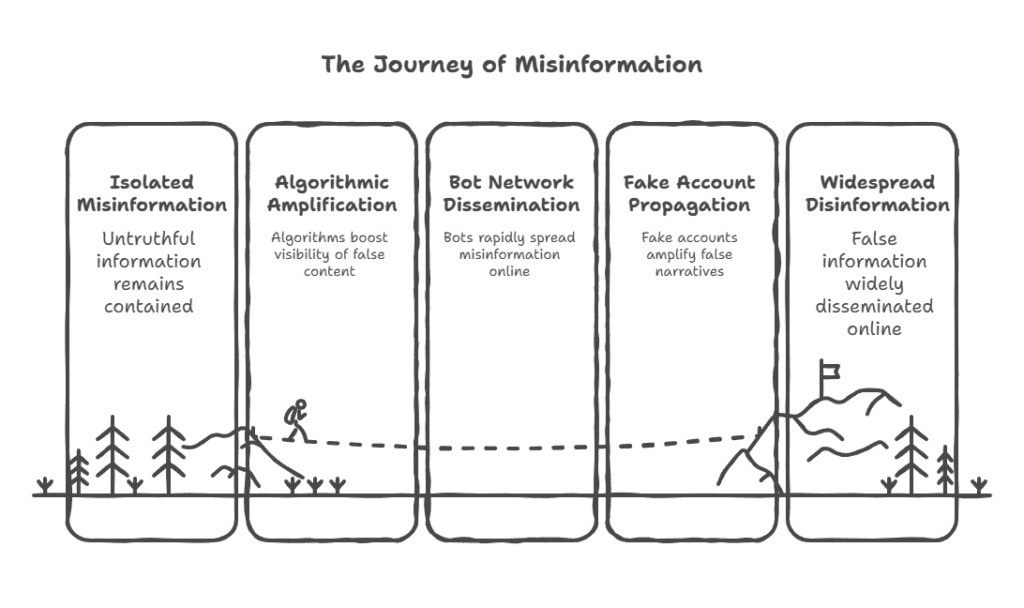

How Misinformation Spreads: The Mechanics of Digital Deception

Algorithmic Amplification of False Information

Social media algorithms prioritize engaging content, which often means controversial or emotionally charged material gets wider distribution. Anwar al-Awlaki’s radical YouTube lectures reached millions of viewers and inspired violence, including the 2009 Fort Hood shooting [6]. The algorithmic suggestions that promoted this extremist content show how social media has changed the way dangerous ideas spread.
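To make the ranking mechanism concrete, here is a minimal Python sketch of an engagement-weighted feed. The `Post` fields, the weights, and the example posts are all hypothetical; real platforms use far more complex, undisclosed ranking systems, so this only illustrates why emotionally charged content can rise to the top when engagement is the objective.

```python
from dataclasses import dataclass


@dataclass
class Post:
    text: str
    likes: int
    shares: int
    comments: int


# Hypothetical weights: shares and comments count for more than likes,
# because they push the post in front of new audiences.
WEIGHTS = {"likes": 1.0, "shares": 3.0, "comments": 2.0}


def engagement_score(post: Post) -> float:
    """Weighted sum of interactions; says nothing about accuracy."""
    return (
        WEIGHTS["likes"] * post.likes
        + WEIGHTS["shares"] * post.shares
        + WEIGHTS["comments"] * post.comments
    )


def rank_feed(posts: list[Post]) -> list[Post]:
    """Order posts from highest to lowest engagement score."""
    return sorted(posts, key=engagement_score, reverse=True)


# Made-up example data.
posts = [
    Post("Measured policy explainer", likes=120, shares=5, comments=10),
    Post("Outrage-bait rumor", likes=80, shares=60, comments=90),
]
feed = rank_feed(posts)
for post in feed:
    print(f"{engagement_score(post):7.1f}  {post.text}")
```

In this toy data the rumor outranks the explainer even though it has fewer likes, because the scoring rewards shares and comments and never checks whether the content is true.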

Bot Networks and Fake Accounts

The misinformation problem is made worse by the widespread use of bots and fake accounts that amplify trends and spread false information. Facebook disclosed that Russia-backed posts reached 126 million Americans around the 2016 U.S. election [7]. Such campaigns show how activity on social media can be manipulated to create artificial trends and influence public opinion.

Exploiting Social Divisions

Misinformation in social networks often exploits existing societal divisions to fuel discord. During the anti-CAA protests and the Bengaluru riots of 2020 [8], content on social media was specifically designed to inflame tensions and create violence. This shows how information disorder in social media can have immediate and dangerous real-world consequences.

The Psychology Behind Misinformation: Why False Information Spreads

Echo Chambers and Confirmation Bias

Social media users naturally tend to connect with others who share similar beliefs – a phenomenon called homophily. Combined with confirmation bias, where people favor information that supports their existing beliefs, this creates dangerous echo chambers. These social media feeds reinforce and amplify pre-existing viewpoints, creating personalized realities that distort public consensus.
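One rough way to quantify homophily is to measure what share of a network’s connections link people who hold the same view. The sketch below is illustrative only: the users, group labels, and connections are made up, and the function name `same_group_share` is an assumption rather than a standard metric name.

```python
def same_group_share(edges, group):
    """Fraction of connections that link two users in the same group."""
    if not edges:
        return 0.0
    same = sum(1 for a, b in edges if group[a] == group[b])
    return same / len(edges)


# Hypothetical users, each labeled with the viewpoint they hold.
group = {"ana": "A", "ben": "A", "cho": "A", "dev": "B", "eli": "B"}

# Hypothetical follow/friend connections between those users.
edges = [
    ("ana", "ben"),
    ("ana", "cho"),
    ("ben", "cho"),
    ("dev", "eli"),
    ("cho", "dev"),  # the only connection that crosses groups
]

share = same_group_share(edges, group)
print(f"Share of same-group connections: {share:.2f}")
```

A share close to 1.0 means almost every connection stays within a like-minded group, which is the structural condition in which confirmation bias can turn a network into an echo chamber.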

Examples like #Pizzagate [9], COVID-19 misinformation, and vaccine misinformation illustrate how these psychological factors contribute to the widespread dissemination of misinformation. The impact of misinformation in these cases shows the urgent need for media literacy education and better platform regulation.

Radicalization Through Social Media

The echo chambers created by homophily and confirmation bias can escalate into extremist ideologies, causing serious social divisions. Anwar al-Awlaki’s online radicalization campaign [10] used social media to reach millions of people. The role of platforms in spreading misinformation and extremist content contributed to lone-wolf attacks worldwide, showing how online social networks can be weaponized for terrorism.

State-Sponsored Information Warfare

Countries like China and Russia have developed sophisticated strategies for using social media for psychological and geopolitical influence. China’s “three warfares” approach emphasizes psychological, legal, and public opinion warfare, pursued through targeted campaigns. Russia uses propaganda on social media platforms to create societal division, as seen during the Euromaidan Revolution [11] and the 2016 U.S. presidential election [12].

The Cambridge Analytica scandal [13] revealed how social media data could be harvested and used to influence political outcomes. India’s 2019 general election was labeled “India’s first WhatsApp elections” due to the extensive use of misinformation on social media platforms for political propaganda [14]. Despite Facebook’s efforts to review accounts, misinformation persisted because of the number of languages involved and the difficulty of detecting misinformation at scale.

The Democratization of Information and Its Consequences

The accidental revelation of Operation Neptune Spear through social media by Sohaib Athar (@ReallyVirtual) [15] marked a significant shift in how news spreads globally. This incident highlighted social media’s ability to challenge traditional journalism and unintentionally expose intelligence operations. The blurring lines between observers and participants on social platforms raise serious concerns about ethics and privacy.

Government and Regulatory Responses to Combat Misinformation

Indian Government Initiatives

The Indian government has implemented comprehensive policies to address the misinformation problem. The 2021 I.T. Rules [16] require social media companies to appoint compliance officers and monitor content on their platforms. The National Digital Health Mission (NDHM) aims to enable secure sharing of medical data, while Aadhaar provides unique identification despite ongoing privacy concerns.

However, implementing uniform regulation remains challenging due to India’s linguistic and cultural diversity. The government continues to work on balancing innovation with regulation to create comprehensive policies that protect individual rights, cybersecurity, and data privacy in our rapidly evolving digital landscape.

Technology Company Responses

Major social media platforms are taking significant measures to address the misinformation problem. Facebook has partnered with multiple fact-checking organizations, including AFP-Hub, BOOM Live, Fact Crescendo, Factly, The Healthy Indian Project, India Today Fact Check, NewsChecker, NewsMeter, Newsmobile Fact Checker, The Quint, and Vishvas.News [17], to implement third-party fact-checking systems.

Google’s “Information Panel” is designed to give users context that counters misinformation, while Twitter’s Birdwatch program [18] relies on community moderation to identify misleading tweets. Programs like TDIL (Technology Development for Indian Languages), run by MeitY [19], help with the moderation of local-language content on social media.

Despite these efforts, the rapid growth of social media content and evolving misinformation spread continue to pose significant challenges. Continuous collaboration, technological advancement, and community engagement remain essential to combat misinformation effectively.

Civil Society and Educational Initiatives

Civil society organizations have launched impactful campaigns to reduce the spread of misinformation. Google and Tata Trusts’ ‘Internet Saathi’ initiative [20] educates rural women about online safety, while organizations like Social Media Matters [21] conduct workshops on digital literacy.

Movements like #SafeOnline and #ThinkBeforeSharing promote safe online social networks and fact-checking practices. Groups like the Internet Freedom Foundation [22] advocate for privacy laws and transparent content moderation policies. Collaborative efforts like ‘Digital Shakti’ [23] with WhatsApp focus on rural women’s digital literacy to counter health misinformation and foster informed digital citizenship.

Law enforcement has also adapted to these challenges. Countermeasures like Op-Sarhad have been implemented by the Rajasthan Police in coordination with intelligence agencies to combat misinformation and espionage attempts. However, significant challenges remain in countering honey-trapping schemes, emphasizing the need for better cyber hygiene and advanced detection tools.

Emerging Technologies and Future Threats

Open Source Intelligence (OSINT) and Social Media

Open-source intelligence has revolutionized information gathering by democratizing access to previously exclusive intelligence. The investigative journalism group Bellingcat used social media data to expose Russian involvement in the MH17 tragedy, demonstrating how information on social media can be analyzed to uncover important truths.

OSINT techniques have also proven useful for prediction. Analysts have successfully used social media data to predict North Korean missile and nuclear tests. However, the use of OSINT requires careful consideration of ethical concerns regarding potential misuse.

Artificial Intelligence and Deepfakes

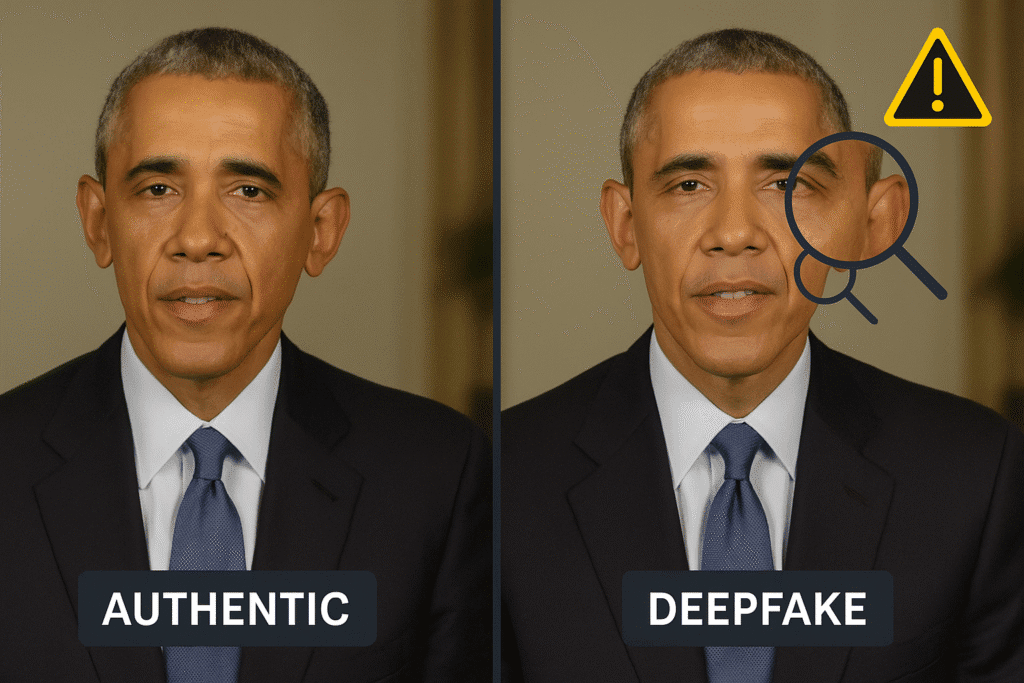

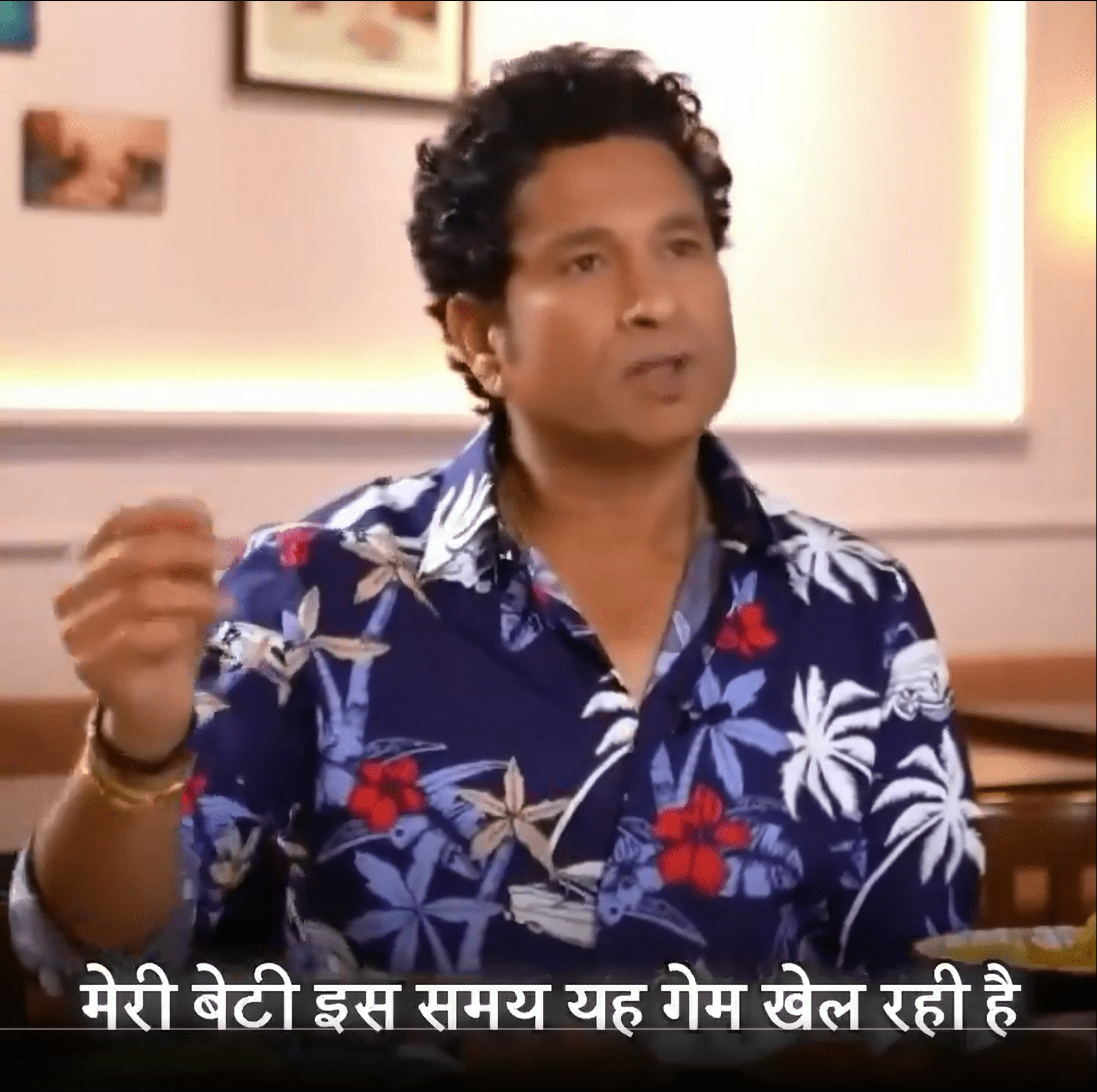

Advancing technology has made deepfakes increasingly convincing, posing serious risks for distorting public perception, particularly in political contexts. A deepfake video featuring a false speech by former U.S. President Obama [24] exemplifies this threat, demonstrating why we must approach online misinformation with extreme caution.

The surge in AI-generated text significantly contributes to fake news and deceptive social media content. Advanced language models can produce human-like articles, tweets, and comments, escalating concerns about AI’s potential role in misinformation campaigns.

Emerging AR and VR technologies also show potential for misuse. While still in early stages, experiments with manipulating visual content suggest these technologies could create entirely fabricated scenarios designed to influence public opinion or deceive users.

Neural Networks and Information Processing

Neural networks are loosely modeled on the human brain and learn hierarchically. These systems analyze vast amounts of data through multiple layers, with each layer building on the patterns detected by the one before it. Google’s neural network learned to identify cats in frames taken from YouTube videos without being told what a cat was, showcasing the ability to learn patterns without explicit programming.

Social media platforms like Facebook employ AI for image analysis and content monitoring, raising privacy concerns about object detection, sentiment analysis, and potential interventions. Neural networks are being explored for misinformation detection, but concerns arise about filtering false information without compromising free expression online.

Generative Adversarial Networks (GANs) create highly convincing deepfakes, raising alarms about AI-generated fake conversations and facial manipulation that fuel misinformation risks. Machine-driven communication tools (MADCOMs) can mimic human interactions, challenging our ability to distinguish between AI-generated and authentic human exchanges.

Collaborative Detection and Countermeasures

Research on misinformation has led to collaborative efforts to develop countermeasures for detecting deepfakes and AI-generated content. Technology companies and research institutions have jointly run initiatives like the Deepfake Detection Challenge to spur the development of algorithms capable of identifying manipulated videos, a proactive step for the field.

The ethical use of neural networks remains crucial due to their potential to deceive and manipulate human behavior, significantly impacting online social networks and information dissemination. GANs raise important ethical questions about content authenticity and the ongoing competition between fake content generation and detection networks.

Military and Strategic Implications

Training for Modern Digital Warfare

The convergence of physical and virtual conflicts highlights the need for military personnel to understand social media environments. Social Media Environment and Internet Replication (SMEIR) systems attempt to simulate online misinformation scenarios for training purposes. However, SMEIR’s limitations in replicating complex internet dynamics highlight the difficulty of countering disinformation and algorithmic control.

These limitations expose vulnerabilities in both civilian societies and military strategies. The impact of misinformation on military operations requires new approaches to information warfare and defense strategies.

National Security Considerations

Social media serves both as a tool for connecting people and as a battleground for global conflicts where misinformation runs rampant. The influence of misinformation on national security requires governments to prioritize social media in their strategic planning.

Military strategists must understand how algorithms prioritize engaging content and how this affects the spread of misinformation during conflicts. The role that social media platforms play in spreading misinformation requires new approaches to information security and psychological operations.

Solutions and Recommendations

Government Actions

Governments should prioritize social media security in their national strategies and promote information literacy through comprehensive public awareness campaigns. Media literacy education must become a cornerstone of educational systems to help citizens understand the spread of misinformation and develop critical thinking skills.

Policy makers need to work with social media companies to develop effective misinformation detection systems while balancing free speech concerns. The challenges of misinformation require nuanced approaches that protect democratic values while preventing harm.

Platform Responsibilities

Social media companies must prioritize ethics and transparency in their operations. They should invest more resources in content monitoring and develop better systems to detect and combat misinformation. The algorithmic amplification of false information needs to be addressed through more responsible algorithm design.

Major social media platforms should collaborate more effectively with fact-checkers, researchers, and civil society organizations to reduce the spread of misinformation. They must also provide better tools for users to identify and report fake news and health misinformation.

Individual Responsibility

Individuals must enhance their information literacy and practice responsible content-sharing habits. Social media users need to understand their role in shaping online conversations and the impact of misinformation on society.

Before sharing content on social media, users should verify information through reliable sources and consider the potential consequences of spreading misinformation. Media literacy education should teach people to recognize characteristics of misinformation and patterns of misinformation in their social media feeds.

Conclusion: The Future of Information Warfare

The weaponization of social media represents one of the most significant challenges of our digital age. Misinformation on social media platforms has evolved from a nuisance to a genuine threat to democratic institutions, public health, and social stability. The spread of misinformation through online social networks will continue to evolve as technology advances and bad actors develop new techniques.

Social media has become the primary battlefield where information and misinformation compete for public attention. The influence of misinformation extends far beyond individual beliefs – it shapes elections, undermines public health emergencies, and can even contribute to violence and social unrest.

The problem of misinformation requires unprecedented cooperation between governments, social media companies, researchers, and individual users. We must combat misinformation through education, technology, regulation, and personal responsibility. The future of social media and its role in society depends on our collective ability to address these challenges while preserving the benefits of digital connectivity.

Media literacy education, improved misinformation detection technologies, and stronger collaboration between all stakeholders offer hope for addressing the misinformation problem. However, the characteristics of misinformation will continue to evolve, requiring constant vigilance and adaptation.

The negative impact of misinformation on society is clear, but with coordinated efforts, we can work to reduce the spread of misinformation and create healthier online social networks. The battle against misinformation is not just about technology – it’s about preserving truth, democracy, and social cohesion in our interconnected world.

Understanding that every user of social media platforms plays a role in this digital ecosystem is crucial. By promoting media literacy, supporting misinformation research, and demanding accountability from social media companies, we can help create a future where information on social media serves to inform and connect rather than divide and deceive.

Bibliography/Further Reading

- [1] “How Twitter Is Changing Modern Warfare.” The Atlantic, October 11, 2016.

- [2] “Clausewitz: War as Politics by other Means.” Liberty Fund.

- [3] “How Fake News Affected the 2016 Presidential Election.” LSU Digital Media Center.

- [4] “Pizzagate conspiracy theory.” Wikipedia.

- [5] “Arab Spring.” Wikipedia.

- [6] “2009 Fort Hood shooting.” Wikipedia.

- [7] Solon, Olivia. “Russia-backed Facebook posts ‘reached 126m Americans’ during US election.” The Guardian, October 31, 2017.

- [8] “Three dead after protests over a social media post turn violent in Bengaluru.” Frontline, August 12, 2020.

- [9] “Pizzagate conspiracy theory.” Wikipedia.

- [10] “Anwar al-Awlaki.” Wikipedia.

- [11] “Revolution of Dignity.” Wikipedia.

- [12] “How Fake News Affected the 2016 Presidential Election.” LSU Digital Media Center.

- [13] “Facebook–Cambridge Analytica data scandal.” Wikipedia.

- [14] Gupta, Rahul. “Clip, flip and Photoshop: Anatomy of fakes in Indian elections.” India Today, May 28, 2019.

- [15] “@ReallyVirtual.” Twitter.

- [16] “Ministry of Electronics and Information Technology Notification.” Government of India, 2021.

- [17] “Meta’s Third-Party Fact-Checking Program.” Facebook.

- [18] “Introducing Birdwatch, a community-based approach to misinformation.” Twitter Blog, 2021.

- [19] “Technology Development for Indian Languages (TDIL).” Ministry of Electronics and Information Technology, Government of India.

- [20] “Internet Saathi.” Tata Trusts.

- [21] “Social Media Matters – Team of Young, Feminist, Social Media Ninjas.”

- [22] “Internet Freedom Foundation.”

- [23] “Digital Shakti.”

- [24] “Obama Deep Fake.” Ars Electronica.

- [25] Singer, P. W., & Brooking, E. T. (2018). LikeWar: The Weaponization of Social Media. Houghton Mifflin Harcourt.

- [26] Stern, J., & Berger, J. M. (2015). ISIS: The State of Terror. HarperCollins.
